Recently, Dr. Quentin D. Atkinson of the Department of Psychology at the University of Auckland in New Zealand published an article discussing results from one of his principal fields of interest: “Evolution of language, religion, sustainability and large-scale cooperation, and the human expansion from Africa”.[1] The data he worked with indicate that human language began once, in Africa, and from there spread with the spread of humans across the world, fracturing into about six thousand languages.

The idea that all languages sprang from a single language is not really a new idea:

Genesis 11

1. And the whole earth was of one language and of one speech.

2. And it came to pass, as they journeyed from the east, that they found a plain in the land of Shi-nar; and they dwelt there.

3. And they said one to another, Go to, let us make brick, and burn them thoroughly. And they had brick for stone, and slime had they for morter.

4. And they said, Go to, let us build us a city and a tower, whose top may reach unto heaven; and let us make us a name, lest we be scattered abroad upon the face of the whole earth.

5. And the Lord came down to see the city and the tower, which the children of men builded.

6. And the Lord said, Behold, the people is one, and they have all one language; and this they begin to do: and nothing will be restrained from them, which they have imagined to do.

7. Go to, let us go down, and there confound their language, that they may not understand one another’s speech.

8. So the Lord scattered them abroad from thence upon the face of all the earth: and they left off to build the city.

9. Therefore is the name of it called Babel; because the Lord did there confound the language of all the earth: and from thence did the Lord scatter them abroad upon the face of all the earth.

Holy Bible, King James version, Genesis, Ch. 11

I am not a biblical scholar, nor much of a believer either, so when I notice a few gaps in the narrative, which have doubtless been explained by scholars and believers, I try to ignore them. I just wonder who the Lord is talking to (angels, seraphim, cherubs, others?) in verses 6 and 7, using the plural pronoun in “let us go down, and there confound their language”, and then why only the Lord is mentioned in the acts of scattering and confounding. Oh, well, I believe it’s destined to remain yet another mystery.

Linguists appear to have proceeded in exactly the opposite direction: starting from the fact that there are many different languages, with different word orders, grammars, sounds and so on, they have found enough commonalities to classify languages into families. The great unification of the languages of India with those of Europe, attributed to Sir William Jones in 1786 (though some of the scholarship that made the discovery possible had been begun somewhat earlier by others), established that a substantial number of languages derived from a single root: the Proto-Indo-European language.

Since then, other language families have been described, though not without controversy. Linguists can trace various kinds of changes, from sound changes to case-ending changes to word-order changes. They also look at meanings: a word that means something in one language is compared with the word that means the same thing in another, and the sounds they share can be used to define their relationship. Their work is brilliant and difficult; I can make that statement because I have tried to learn a few languages other than English and have been spectacularly unsuccessful. Eh bien (oh, well).

In the last century, work has proceeded on grouping languages into families, but, according to The First Word, by Christine Kenneally:

…the search for the origins of language was formally banned from the ivory tower in the nineteenth century and was considered disreputable for more than a century. The explanation given to me in a lecture hall in late-twentieth-century Australia had been handed down from teacher to student for the most part unchallenged since 1866, when the Societe de Linguistique of Paris declared a moratorium on the topic. These learned gentlemen decreed that seeking the origins of language was a futile endeavor because it was impossible to prove how it came about. Publication on the subject was banned.[2]

The work of linguists has been to look at relationships between languages and to establish which are related to which. Ms. Kenneally describes the situation after the ban:

…most linguists were field linguists, researchers who journeyed into uncharted territory and broke bread with the inhabitants. They had no dictionary or phrase book but learned the local language, working out how verbs connect with objects and subjects, and how all types of meaning are conveyed. … When they transcribe a language for the first time, they create a rigorous catalog of sounds, words and parts of speech, called the grammar of the language. Once this is completed they match one catalog to another – finding evidence of family relationships between languages. Grammar writers are meticulous and diligent, arranging and rearranging the specimens of language into a lucid system.[3]

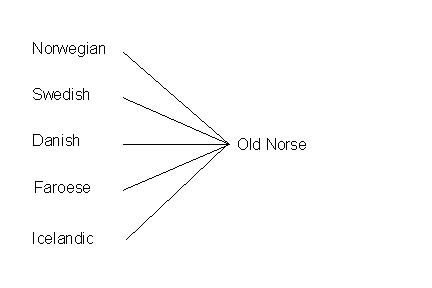

Once the relationships were established, then the language from which the related languages came from could potentially be described, and the relationships could be mapped in tree diagrams. An example of the kind of tree diagrams that results from this work is below, showing the current languages that appear to have come from a common, older source:

Attempts have been made to extend the tree diagrams from existing languages back to earlier languages, but since the 1866 declaration in Paris, linguists' work has been oriented toward explaining the trees and grouping families, without speculating, at least publicly, on the original mother language. That remained true until the late 1950s and early 1960s, when Noam Chomsky made all linguists rethink their methodology with his ideas of transformational grammar and universal grammar, which offered a way to think about all languages as products of a similar way of constructing meaning. Chomsky himself, however, discouraged the idea of looking at language from an evolutionary standpoint. The First Word quotes him as saying “…that it is ‘hard to imagine a course of selection that could have resulted in language.’”[4]

Some researchers in linguistic analysis have focused on understanding the language abilities of non-human species, such as chimpanzees and other apes, and parrots, and some of their successes have contributed to our understanding of the character of language, its limits and its requirements. Others have looked at the physiological basis for producing language: one notable controversy has been whether Neanderthals were physiologically equipped to produce the sounds that their contemporaries, the Cro-Magnons, and later Homo sapiens would have used for language. Still others have focused on the neurological basis of language production, notably mapping the brain and trying to understand how the brain and its assemblage of neurons actually match sounds to meanings.

Those who have been cataloguing languages have developed a set of resources that now reside online, one of which is a database of the structural properties, including the sound inventories, of the languages that have been catalogued: “The World Atlas of Language Structures” (see below).

But until 1990, when Steven Pinker and Paul Bloom published a paper suggesting that the study of the evolution of language was not only possible but necessary, the door to publishing ideas on language evolution was closed, and with it the door to speculation on “the mother language”. After the paper, all sorts of approaches were ‘legitimized’, although not without controversy.

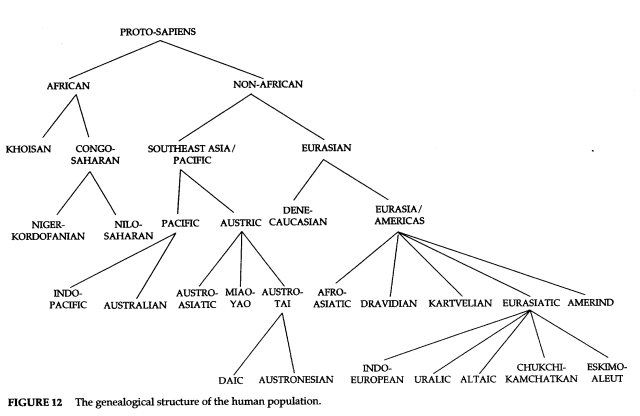

One of the controversial hypotheses was developed by Merritt Ruhlen, who published a book called The Origin of Language in 1994. He built a case for a single origin of humankind and created a tree diagram showing the relationships stemming from the original human population. His tree parallels the way he presents language as possibly having developed.

In the book, though, he cautions that this diagram is not “…immune from change as the investigation of human prehistory proceeds.”[5]

Meanwhile, classical linguists continued the yeoman’s work of trying to write grammars of all the languages on earth, which are evidently disappearing at an alarming rate. As a repository for this kind of information, an online database has been developed:

The World Atlas of Language Structures (WALS) is a large database of structural (phonological, grammatical, lexical) properties of languages gathered from descriptive materials (such as reference grammars) by a team of 55 authors (many of them the leading authorities on the subject).

…

WALS Online is a joint effort of the Max Planck Institute for Evolutionary Anthropology and the Max Planck Digital Library.[6]

WALS currently has datapoints for 2678 of the approximately 6000 languages in the world.

Crunching data from databases to derive information has become both ubiquitous and problematic: there is so much data available in so many fields that finding suitable means of crunching it all remains an unfulfilled quest in most of them. The 11 February 2011 issue of SCIENCE (Volume 331) has a special section of almost 20 articles about the current surfeit of data.

Dr. Atkinson appears to have cut the Gordian knot of the language problem by going to WALS, selecting a global sample of 504 languages, and counting the number of phonemes in each, without trying to match the sounds or trace sound changes. He then related each language's number of phonemes to its geographical location and found that the languages with the most phonemes were in Africa, and that the number of phonemes per language fell off the farther from Africa humans appear to have migrated. The languages with the fewest phonemes were found in South America and Oceania. This pattern resembles the one Merritt Ruhlen described (see above).
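The core idea in that paragraph, relating phoneme counts to distance from a possible point of origin, can be illustrated with a simple least-squares fit. This is a minimal sketch under stated assumptions, not Dr. Atkinson's actual procedure: it uses straight great-circle distances (real migration routes are not straight lines), a plain linear regression, and a hypothetical helper `best_origin` that tries a list of candidate origin points and keeps the one whose distances best explain the phoneme counts with a negative slope. The input format, a list of (latitude, longitude, phoneme count) tuples, is also an assumption and does not reflect how WALS stores its data.

```python
import math


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    r = 6371.0  # mean Earth radius in km
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def fit_line(xs, ys):
    """Ordinary least squares fit y = a + b*x.
    Returns (intercept, slope, r_squared), or None if all x values are equal."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        return None
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    syy = sum((y - my) ** 2 for y in ys)
    slope = sxy / sxx
    intercept = my - slope * mx
    r_squared = sxy * sxy / (sxx * syy) if syy > 0 else 0.0
    return intercept, slope, r_squared


def best_origin(languages, candidates):
    """Hypothetical helper: languages is a list of (lat, lon, phoneme_count),
    candidates a list of (lat, lon). Returns (candidate, slope, r_squared) for
    the candidate whose distances best explain the counts with a negative slope
    (fewer phonemes farther away), or None if no candidate qualifies."""
    counts = [count for _, _, count in languages]
    best = None
    for c_lat, c_lon in candidates:
        dists = [haversine_km(c_lat, c_lon, lat, lon) for lat, lon, _ in languages]
        fit = fit_line(dists, counts)
        if fit is None:
            continue
        _, slope, r_squared = fit
        if slope < 0 and (best is None or r_squared > best[2]):
            best = ((c_lat, c_lon), slope, r_squared)
    return best


if __name__ == "__main__":
    # Made-up illustrative data, NOT real WALS values: five "languages" on the
    # equator whose phoneme counts fall by exactly 0.01 per km from (0, 0).
    toy = [(0.0, lon, 100 - 0.01 * haversine_km(0.0, 0.0, 0.0, lon))
           for lon in (0.0, 10.0, 20.0, 30.0, 40.0)]
    print(best_origin(toy, [(0.0, 0.0), (0.0, 40.0)])[0])  # (0.0, 0.0)
```

With the toy data, counts rise with distance from the second candidate, so only the first candidate yields the negative slope the hypothesis predicts. A real analysis would need many more candidate points, a better distance measure, and a proper statistical treatment of the fit.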

This closely matches the serial founder effect that has been used to explain the distribution of genetic diversity, in work by, among others, Luigi Luca Cavalli-Sforza, described in his book Genes, Peoples, and Languages, published in 2000: the greatest genetic diversity is found in Africa, and it diminishes in a similar pattern the farther from Africa one looks. The “serial founder effect” rests on the idea that the core origin population will have had enough time and enough members for its genetic makeup to diversify, while a small population that leaves the core takes with it only a subset of those genes and, even as it grows, will have had fewer members and less time to diversify. If one of those smaller populations splits in turn, the split creates another genetic bottleneck; that repetition is the “serial” part of the effect.
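The bottleneck mechanism can be illustrated with a toy simulation. This is a deliberately simplified sketch that assumes a fixed population size, founder groups drawn at random without replacement, regrowth only by copying the founders, and no new mutations. It therefore captures the loss of diversity at each split, but not the extra time the core population has had to diversify. The function name `serial_founder_chain` and its parameters are hypothetical, chosen for illustration.

```python
import random


def serial_founder_chain(source, founders, steps, seed=0):
    """Toy serial founder model (hypothetical helper, no mutation).

    source:   list of variant labels, one per individual in the core population
    founders: number of individuals who leave to found each new population
    steps:    number of successive founding events
    Returns the number of distinct variants in the core population and in
    each successive daughter population.
    """
    rng = random.Random(seed)
    size = len(source)
    population = list(source)
    diversity = [len(set(population))]
    for _ in range(steps):
        # Bottleneck: a small group leaves, carrying only its own variants.
        group = rng.sample(population, founders)
        # Regrowth: the new population returns to full size, but every
        # individual inherits a variant from one of the founders.
        population = [rng.choice(group) for _ in range(size)]
        diversity.append(len(set(population)))
    return diversity


if __name__ == "__main__":
    # 1,000 individuals, each carrying a distinct variant; 20 founders per split.
    print(serial_founder_chain(list(range(1000)), founders=20, steps=5))
```

Because each new population can only contain variants its founders carried, the count can never rise from one step to the next, and with small founder groups it typically falls at each split, mirroring the decline in diversity with distance from the origin.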

I have not followed the articles published after Dr. Atkinson’s, in which I expect very smart linguists, geneticists, and psychologists may have pointed out inconsistencies or taken issue with his methodology. I found his article to be a model of clarity, however, and while I still have doubts, they are hardly informed ones. I was impressed with his method, which was to take a set of data that no one had looked at in quite his way and devise clever measures that offered a new route to a conclusion in support of a hypothesis. His analysis is persuasive.

As an aside, when the New York Times published an article describing Dr. Atkinson’s work, a long thread of reader reactions followed, many of which would have benefited from the commenters actually reading Dr. Atkinson’s article before delivering an apparently knee-jerk response. And while I have only scratched the surface here, the books I have mentioned and the article in SCIENCE are worth the effort to read and comprehend for anyone interested in how language is measured and analyzed.

The article and books referred to:

Atkinson, Quentin D., “Phonemic Diversity Supports a Serial Founder Effect Model of Language Expansion from Africa”, SCIENCE, 15 April 2011, Vol. 332, no. 6027, pp. 346-349, plus online supporting material at

www.sciencemag.org/cgi/content/full/332/6027/346/DC1

Cavalli-Sforza, Luigi Luca, Genes, Peoples, and Languages, translated by Mark Seielstad, University of California Press, Berkeley, CA, 2000.

Kenneally, Christine, The First Word: The Search for the Origins of Language, Penguin Books, New York, N.Y., 2007.

Ruhlen, Merritt, The Origin of Language: Tracing the Evolution of the Mother Tongue, John Wiley & Sons, Inc., New York, N.Y., 1994.

And of course,

Holy Bible, King James version, Genesis, Ch. 11, National Bible Press, Philadelphia, PA, copyright 1958, originally published 1611 in London, England.

[2] Kenneally, Christine, The First Word: The Search for the Origins of Language, Penguin Books, New York, N.Y., 2007. p. 7.

[3] Ibid., p. 26.

[4] Ibid., p. 39. From the text of The First Word, it is not clear where Chomsky’s quote comes from.

[5] Ruhlen, Merritt, The Origin of Language: Tracing the Evolution of the Mother Tongue, John Wiley & Sons, Inc., New York, N.Y., 1994. Diagram on p. 192, statement on p. 193.